This article points out some objective strengths of web server log analysis compared to JavaScript-based statistics, such as Google Analytics. Depending on your preferences and the type of website you run, some or all of these arguments may apply to you. In any case, everyone should at least be aware of the differences in order to make the right decision.

1. You don’t need to edit HTML code to include scripts

Depending on how your website is organized, this could be a major task, especially if it contains a lot of static HTML pages. Adding script code to all of them will surely take time. Even if your website is based on a content management system with a centralized design template, you’ll still need to be careful not to forget to add the code to any custom pages that live outside the CMS.

2. Scripts take additional time to load

Regardless of what Google Analytics officials say, practical experience shows that tracking scripts slow pages down. A script is still a script, and it takes some time to load. If the external file is located on a third-party server (as is the case with Google Analytics), the slowdown is even more noticeable, because the visitor’s browser must resolve another domain.

As a solution, they suggest putting the inclusion code at the end of the page. In that case the page does appear to load more quickly, but there is a good chance that the visitor will click another link before the script is executed. As a result, you won’t see these hits in your stats, and they are lost forever.

3. If a website exists, log files exist too

With JavaScript analytics, stats are available only for the periods when the code was included. If you forget to put the code on some pages, the opportunity is lost forever. Similarly, if you decide to start collecting stats today, you’ll never be able to see stats from yesterday or before. The same applies to goals: metrics are available only from the moment you decide to track them. With some log analyzers, you can freely add more goals at any time and still analyze them against log files from the past.
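
To illustrate the point about goals, here is a minimal sketch of counting a goal retroactively from logs that were written before the goal was ever defined. It assumes the server writes the common Apache-style access log format; the goal page `/thank-you.html`, the sample lines, and the function name are made up for illustration.

```python
import re
from collections import Counter

# Assumption: Apache-style Common/Combined Log Format. Only the day and the
# requested path are captured here.
LINE_RE = re.compile(
    r'\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] "(?:GET|POST) (?P<path>\S+)'
)

def goal_hits_per_day(lines, goal_path):
    """Count requests to goal_path per day from already-recorded log lines."""
    hits = Counter()
    for line in lines:
        m = LINE_RE.match(line)
        # Ignore the query string when comparing against the goal page.
        if m and m.group("path").split("?")[0] == goal_path:
            hits[m.group("day")] += 1
    return hits

# Hypothetical log lines recorded before anyone thought of tracking this goal.
sample = [
    '203.0.113.5 - - [10/Mar/2024:09:15:02 +0000] "GET /thank-you.html HTTP/1.1" 200 512',
    '203.0.113.9 - - [10/Mar/2024:11:40:47 +0000] "GET /index.html HTTP/1.1" 200 2048',
    '198.51.100.7 - - [11/Mar/2024:08:01:33 +0000] "GET /thank-you.html?ref=mail HTTP/1.1" 200 512',
]
goals = goal_hits_per_day(sample, "/thank-you.html")
for day, count in sorted(goals.items()):
    print(day, count)
```

A real log analyzer does far more (sessions, conversion paths), but the principle is the same: the data is already on disk, so a new question can be asked of old traffic.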

4. Server log files contain hits to all files, not just pages

If you rely solely on JavaScript-based analytics, you have no information about hits to images, XML files, Flash (SWF), programs (EXE), archives (ZIP, GZ), and so on. Although you could consider these hits irrelevant, for most webmasters they are not. Even if you don’t usually maintain other types of files, you surely have some images on your website, and these could be linked from external websites without you knowing anything about it.
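
As a rough sketch of how externally linked images could be spotted, the snippet below counts image requests whose referrer is a different host. It assumes the Apache-style Combined Log Format (which includes the referrer field); the host names, sample lines, and extension list are illustrative assumptions.

```python
import re
from collections import Counter
from urllib.parse import urlparse

# Assumption: Combined Log Format, where the referrer follows the response size.
LINE_RE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+)[^"]*" \d{3} \S+ "(?P<referer>[^"]*)"'
)
IMAGE_EXT = (".png", ".jpg", ".jpeg", ".gif")  # illustrative, not exhaustive

def hotlinking_sites(lines, own_host):
    """Count image hits per external referring host."""
    sites = Counter()
    for line in lines:
        m = LINE_RE.match(line)
        if not m:
            continue
        path = m.group("path").split("?")[0].lower()
        ref_host = urlparse(m.group("referer")).hostname  # None for "-"
        if path.endswith(IMAGE_EXT) and ref_host and ref_host != own_host:
            sites[ref_host] += 1
    return sites

# Hypothetical sample lines.
sample = [
    '203.0.113.5 - - [10/Mar/2024:09:15:02 +0000] "GET /img/logo.png HTTP/1.1" 200 5120 "http://forum.example.net/thread/42" "Mozilla/5.0"',
    '203.0.113.6 - - [10/Mar/2024:09:16:10 +0000] "GET /img/logo.png HTTP/1.1" 200 5120 "http://forum.example.net/thread/43" "Mozilla/5.0"',
    '203.0.113.7 - - [10/Mar/2024:09:17:30 +0000] "GET /img/logo.png HTTP/1.1" 200 5120 "http://www.example.com/index.html" "Mozilla/5.0"',
    '203.0.113.8 - - [10/Mar/2024:09:18:44 +0000] "GET /index.html HTTP/1.1" 200 2048 "-" "Mozilla/5.0"',
]
sites = hotlinking_sites(sample, "www.example.com")
print(sites.most_common())
```

Because these image requests never execute any tracking script, none of them would show up in JavaScript-based stats.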

5. You can investigate and control bandwidth usage

Although you might not be aware of it, most hosting providers limit bandwidth usage and usually base their pricing on it. Bandwidth costs them money and, naturally, it most probably costs you as well. You would be surprised how many domains (usually from third-world countries) poll your whole website on a regular basis, possibly wasting gigabytes of your bandwidth every day. If you can identify these domains, you can easily block their traffic.
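
One way to find the heaviest consumers is to add up the response sizes per client. The sketch below assumes the Apache-style log format, in which the field after the status code is the response size in bytes (or `-` when no body was sent); the addresses and sizes are invented sample data.

```python
import re
from collections import Counter

# Assumption: Apache-style log line; host first, size right after the status.
LINE_RE = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} (?P<size>\d+|-)'
)

def bytes_per_client(lines):
    """Sum the bytes sent to each client address."""
    totals = Counter()
    for line in lines:
        m = LINE_RE.match(line)
        if m and m.group("size") != "-":
            totals[m.group("host")] += int(m.group("size"))
    return totals

# Hypothetical sample lines.
sample = [
    '198.51.100.7 - - [10/Mar/2024:09:15:02 +0000] "GET /files/setup.zip HTTP/1.1" 200 3000000',
    '198.51.100.7 - - [10/Mar/2024:09:15:40 +0000] "GET /files/manual.pdf HTTP/1.1" 200 500000',
    '203.0.113.5 - - [10/Mar/2024:09:16:11 +0000] "GET /index.html HTTP/1.1" 200 2048',
    '203.0.113.5 - - [10/Mar/2024:09:20:00 +0000] "GET /index.html HTTP/1.1" 304 -',
]
totals = bytes_per_client(sample)
for host, size in totals.most_common(5):
    print(host, size)
```

The clients at the top of such a list are the candidates to investigate and, if they turn out to be unwanted, to block.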

6. Bots (spiders) are excluded from JavaScript-based analytics

As with the previous point, some (bogus) spiders misbehave and waste your bandwidth without bringing you any benefit. In addition, server logs also contain information about visits from legitimate bots, such as those from Google or Yahoo. If you rely solely on JavaScript-based analytics, you have no idea how often they come and which pages they visit.
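
A simple way to see crawler activity is to look for known tokens in the user-agent field. This sketch assumes the Combined Log Format and uses a deliberately tiny token table (`Googlebot` and Yahoo’s `Slurp`); the sample lines are invented, and since a user agent can be spoofed, treat the result as a hint rather than proof.

```python
import re
from collections import Counter

# Assumption: Combined Log Format, with the user agent as the last quoted field.
LINE_RE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)
# Illustrative token table; a real analyzer would ship a much longer list.
KNOWN_BOTS = {"googlebot": "Google", "slurp": "Yahoo"}

def bot_hits(lines):
    """Count hits per known crawler, based on the user-agent string."""
    hits = Counter()
    for line in lines:
        m = LINE_RE.match(line)
        if not m:
            continue
        agent = m.group("agent").lower()
        for token, name in KNOWN_BOTS.items():
            if token in agent:
                hits[name] += 1
                break
    return hits

# Hypothetical sample lines.
sample = [
    '192.0.2.10 - - [10/Mar/2024:09:15:02 +0000] "GET /index.html HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.10 - - [10/Mar/2024:09:15:09 +0000] "GET /about.html HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.20 - - [10/Mar/2024:09:16:30 +0000] "GET /index.html HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; Yahoo! Slurp; http://help.yahoo.com/help/us/ysearch/slurp)"',
    '203.0.113.5 - - [10/Mar/2024:09:17:45 +0000] "GET /index.html HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
hits = bot_hits(sample)
print(hits.most_common())
```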

7. Log files record all traffic, even if JavaScript is disabled

A certain percentage of users choose to turn off JavaScript, and some use browsers that don’t support it at all. These visits can’t be identified by JavaScript-based analytics.

8. You can find out about hacker attacks

Hackers can attack your website using various methods, but none of them would be recorded by JavaScript analytics. Because every access to your web server is recorded in the log files, you are able to identify such attempts and save yourself from damage (by blacklisting the attackers’ domains or closing security holes on your website).
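
As a very rough illustration, the following sketch flags requests whose URL contains a few well-known attack markers. The marker list is a small assumption chosen for the example and is nowhere near a complete detection rule set; the log format is assumed to be Apache-style, and the sample lines are invented.

```python
import re
from collections import defaultdict
from urllib.parse import unquote_plus

# Assumption: Apache-style log line; only the client host and path are needed.
LINE_RE = re.compile(r'(?P<host>\S+) \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+)')
# Illustrative markers only: path traversal, SQL injection, script injection.
SUSPICIOUS = ("../", "/etc/passwd", "union select", "<script")

def suspicious_requests(lines):
    """Group requested paths containing a suspicious marker by client host."""
    found = defaultdict(list)
    for line in lines:
        m = LINE_RE.match(line)
        if not m:
            continue
        # Decode %xx escapes and '+' so encoded payloads are also caught.
        decoded = unquote_plus(m.group("path")).lower()
        if any(marker in decoded for marker in SUSPICIOUS):
            found[m.group("host")].append(m.group("path"))
    return dict(found)

# Hypothetical sample lines.
sample = [
    '198.51.100.7 - - [10/Mar/2024:09:15:02 +0000] "GET /page.php?id=1+UNION+SELECT+password+FROM+users HTTP/1.1" 200 512',
    '198.51.100.7 - - [10/Mar/2024:09:15:05 +0000] "GET /download.php?file=..%2F..%2Fetc%2Fpasswd HTTP/1.1" 403 210',
    '203.0.113.5 - - [10/Mar/2024:09:16:11 +0000] "GET /index.html HTTP/1.1" 200 2048',
]
found = suspicious_requests(sample)
for host, paths in found.items():
    print(host, len(paths))
```

Simple substring matching like this produces false positives and misses anything it was not told about, so it is best seen as a starting point for a manual review of the flagged addresses.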

9. Log files contain error information

Without log files, you generally have no information about errors and status codes (such as Page not found, Internal server error, or Forbidden). That means you can miss technical problems with your website that lower visitors’ perception of its quality. Moreover, any attempt to access forbidden areas of your website can be easily identified in the logs.
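
A short sketch of pulling error information out of a log: it counts every response with a 4xx or 5xx status, grouped by status code and requested path. The Apache-style log format and the sample lines are assumptions made for the example.

```python
import re
from collections import Counter

# Assumption: Apache-style log line; the status code follows the quoted request.
LINE_RE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+)[^"]*" (?P<status>\d{3})'
)

def error_report(lines):
    """Count client (4xx) and server (5xx) errors per (status, path) pair."""
    errors = Counter()
    for line in lines:
        m = LINE_RE.match(line)
        if m and m.group("status")[0] in "45":
            errors[(m.group("status"), m.group("path"))] += 1
    return errors

# Hypothetical sample lines.
sample = [
    '203.0.113.5 - - [10/Mar/2024:09:15:02 +0000] "GET /old-page.html HTTP/1.1" 404 310',
    '203.0.113.9 - - [10/Mar/2024:09:18:20 +0000] "GET /old-page.html HTTP/1.1" 404 310',
    '198.51.100.7 - - [10/Mar/2024:09:19:41 +0000] "GET /admin/ HTTP/1.1" 403 210',
    '203.0.113.5 - - [10/Mar/2024:09:20:00 +0000] "GET /index.html HTTP/1.1" 200 2048',
]
errors = error_report(sample)
for (status, path), count in errors.most_common():
    print(status, path, count)
```

A frequently requested 404 usually points to a broken link worth fixing, while repeated 403 responses show who keeps trying to reach restricted areas.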

10. By using a log file analyzer, you don’t give away your business data

Last but not least, your stats are not available to a third party who can use them at its convenience. Google bought all rights to Urchin, a web statistics product that was popular and quite expensive at the time, repackaged it, and then allowed anyone to use it for free. The question is: why? They surely get something in return, as the Google Analytics license agreement allows them to use your information for their own purposes, and even to share it with others if you choose to participate in the sharing program.

What could they possibly use it for? To give just a few obvious ideas: tweaking AdWords minimum bids, deciding how to prioritize ads, and improving their services (and profits), all based on traffic data collected from you and others.

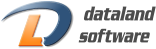

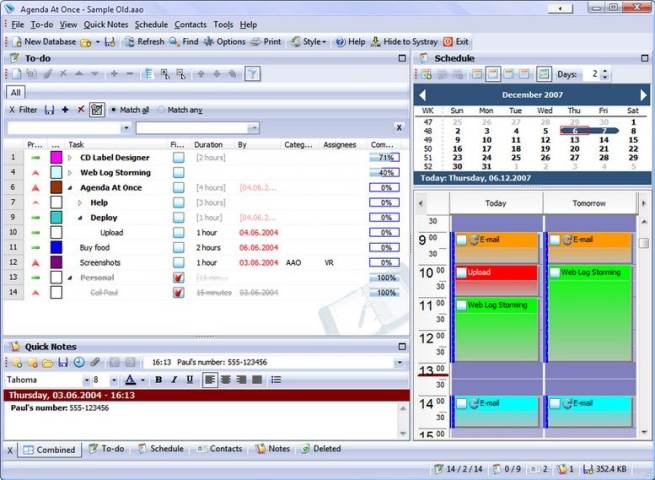

Web Log Storming – an interactive web log analyzer

Software Development

Software Development Web Design and Programming

Web Design and Programming Examples of our Work

Examples of our Work

“Opinions are like noses, everyone has one”

“Opinions are like noses, everyone has one”